It's no secret that Google has become more active in search in recent years, especially since it reorganized significantly in 2015. On September 22, 2016, it announced the release of open source software which can detect objects and setting an image to automatically generate a caption describing it. Of course, it doesn't have the same level of creativity as human beings to create the prose in captions, but the image encoder otherwise known as Inception V3 should have caught the eye for some reason. that transcend the superficial "look at the legends he can do" motif. Software like this, in fact, can be a stepping stone to something bigger on the way to more advanced artificial intelligence.

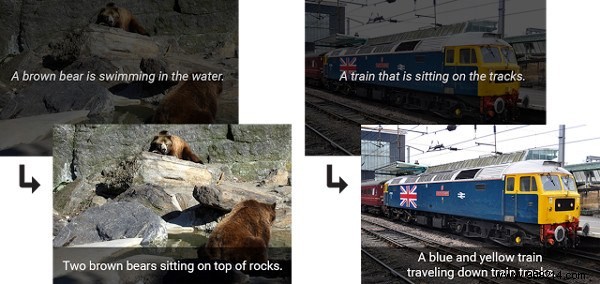

Although we generally believe that every picture is worth a thousand words, Inception V3 does not necessarily share this opinion. Automatic image captioning software has very little to say about what it sees, but it does at least have a basic concrete understanding of what is contained within the frame presented to it.

With this rudimentary information, we have taken a step towards the ability of software to understand visual stimuli. Giving a robot this kind of power would allow it to react to such stimuli, bringing its intelligence to just below the level of most basic aquatic animals. That might not seem like much, but if you take a look at how the bots currently behave (when tested outside of their very restrictive parameters), you'll find that would be a real leap in intelligence compared to how amoebic which they can perceive their own environment.

The fact that we now have software that (with 93% accuracy) can caption images means that we have somewhat overcome the hurdle of getting computers to make sense of their surroundings. Of course, that doesn't mean we're anywhere near finished in that department. It's also worth mentioning that the Inception V3 was trained by humans over time and uses the information it "learned" to decipher other images. To have a true understanding of one's environment, one must be able to reach a more abstract level of perception. Is the person in the picture angry? Are two people fighting? What is the woman on the bench crying about?

The above questions represent the kind of things we ask ourselves when we meet other human beings. It's the kind of abstract inquiry that requires us to extrapolate more information than a doohickey captioning an image can do. Let's not forget that icing on the cake, we like to call an emotional (or "irrational") reaction to what we see. That's why we consider the flowers beautiful, the sewers disgusting, and the fries tasty. It's something we're still wondering if we'll ever get to the machine level without hard-coding it. The truth is that this kind of "human" phenomenon is probably impossible without restrictive programming. Of course, that doesn't mean we won't stop trying. We are, after all, Human .

Do you think our robot overlords will ever learn to appreciate the intricacy of a rose petal under a microscope? Tell us in the comments!