You and I grew up counting from one to ten. Computers, however, count a little differently. They use a different number system, called binary, to represent data. But what does that mean, and why do computers use it?

As a child, you were taught to count on your fingers: ten fingers, ten numbers. To count past ten, you can hold down one finger to mark a completed group of ten and count through the others again. This is the basis of the base ten, or decimal, number system, which uses the ten digits 0 through 9 and makes each place worth ten times the place to its right. It's the number system you use every day, and it shapes most people's understanding of numbers.
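As a minimal sketch of what "each place is worth ten times the one to its right" means, the Python function below splits a number into (digit, place value) pairs. The name `decimal_places` is a hypothetical helper for illustration, and the input is assumed to be a non-negative integer.

```python
def decimal_places(n: int) -> list[tuple[int, int]]:
    """Split a non-negative integer into (digit, place value) pairs, lowest place first."""
    pairs = []
    place = 1  # the lowest place is the ones place
    while True:
        digit = n % 10          # the digit sitting in the current place
        pairs.append((digit, place))
        n //= 10                # drop the digit we just read
        place *= 10             # each place is worth ten times the previous one
        if n == 0:
            break
    return pairs

# 472 = 2 x 1 + 7 x 10 + 4 x 100
for digit, place in decimal_places(472):
    print(f"{digit} x {place}")
```

Adding up every `digit x place` product gives back the original number, which is exactly the step your brain performs automatically when you read "472".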

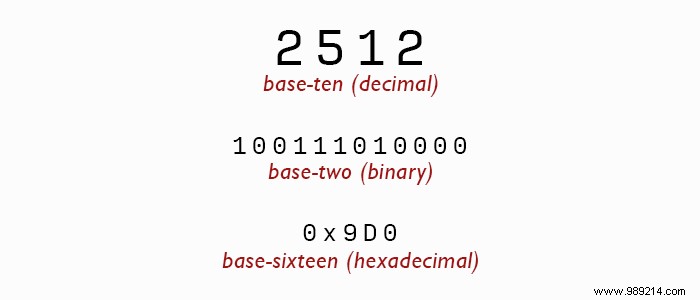

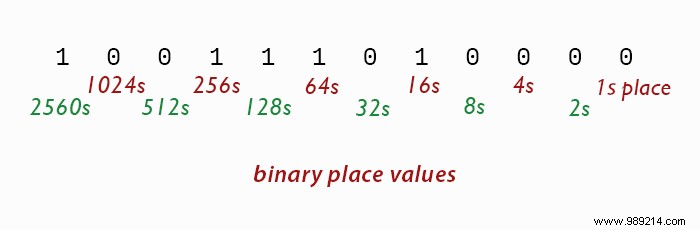

However, computers do not use base ten internally: the hardware needed to represent a base ten digit directly would be far more complex. Instead, computers use binary, or base two, to count. Binary has only two digits: zero and one. Its places also have different values. The lowest place is worth one, then two, four, eight, sixteen, and so on, with each place worth twice the previous one. To find the decimal equivalent of a binary number, multiply each digit by its place value and add up the results. It's the same thing you do when reading a base ten number; you just do it so quickly that you don't notice the process.
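The procedure above can be sketched in a few lines of Python. This is a minimal illustration, assuming the input is a string of `0` and `1` characters; `binary_to_decimal` is a hypothetical name, and Python's built-in `int(text, 2)` is shown alongside it as a cross-check.

```python
def binary_to_decimal(bits: str) -> int:
    """Evaluate a binary string by multiplying each digit by its place value."""
    total = 0
    place_value = 1  # the lowest (rightmost) place is worth one
    for bit in reversed(bits):
        if bit not in "01":
            raise ValueError(f"not a binary digit: {bit!r}")
        total += int(bit) * place_value
        place_value *= 2  # each place is worth twice the previous one
    return total

print(binary_to_decimal("1011"))  # 1x8 + 0x4 + 1x2 + 1x1 = 11
print(int("1011", 2))             # Python's built-in parser agrees: 11
```

Walking the string from right to left lets the place value start at one and double at each step, mirroring the "one, two, four, eight" sequence described in the text.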

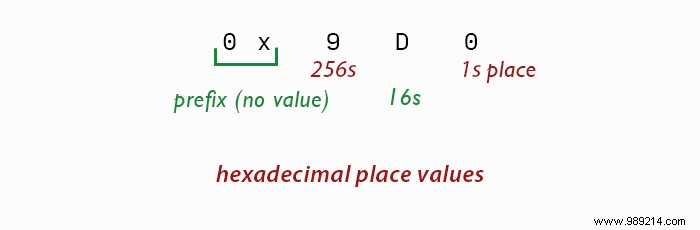

Hexadecimal works differently from both binary and decimal. It uses base sixteen, which means sixteen different digits can appear in each place. Since we only have ten numeric digits in everyday use, we use the first six letters of the Latin alphabet (A, B, C, D, E, F) to stand for the values 10 through 15. You may recognize this format from color codes used in web design, such as #FF0000. In computing, a hexadecimal number is often prefixed with 0x to indicate that the following string should be interpreted as hexadecimal. Each place value is sixteen times larger than the previous one, starting with the ones place: 1, 16, 256, 4096, and so on.
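The same multiply-and-add process works for hexadecimal; only the base changes. Below is a minimal Python sketch, assuming the input uses the digits 0 to 9 and A to F (in either case) with an optional `0x` prefix. `hex_to_decimal` and `HEX_DIGITS` are hypothetical names for illustration; Python's built-in `int(text, 16)` does the same job.

```python
HEX_DIGITS = "0123456789ABCDEF"

def hex_to_decimal(text: str) -> int:
    """Evaluate a hexadecimal string; each place is worth sixteen times the previous."""
    if text.lower().startswith("0x"):
        text = text[2:]  # strip the conventional 0x prefix
    total = 0
    place_value = 1  # the lowest place is the ones place
    for ch in reversed(text.upper()):
        digit = HEX_DIGITS.index(ch)  # A -> 10 ... F -> 15; raises ValueError otherwise
        total += digit * place_value
        place_value *= 16
    return total

print(hex_to_decimal("0xFF"))  # 15x16 + 15x1 = 255
print(hex_to_decimal("1A3"))   # 1x256 + 10x16 + 3x1 = 419
```

Looking up each character's position in `HEX_DIGITS` is a simple way to map the letters A through F to the values 10 through 15.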

It would certainly be handy if we could use one number system for everything. Unfortunately, each number system has its own purpose, so we're stuck using more than one.

Decimal is the system most familiar to human operators, and it is shared by nearly every culture on Earth. This makes it the standard counting scheme for human communication. No surprises there.

Computers, however, do not count in decimal. Their circuits can represent only one of two states: ON or OFF. This makes binary a natural fit, since it also has exactly two digits: one and zero. Zero, of course, represents OFF, while one represents ON.

Hexadecimal is more of an edge case. It is mainly used as a convenient way to show binary values to human operators. A single hexadecimal digit represents exactly four bits, so two hexadecimal digits represent eight bits, or one byte. This is why you will often see hexadecimal used to display the contents of memory and registers: each digit maps neatly onto four bits, and a short hex string is much easier to read than a long string of ones and zeros.
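Because each hexadecimal digit covers exactly four bits, converting binary to hex needs no arithmetic on the whole number: split the bits into groups of four and translate each group on its own. A minimal Python sketch, assuming the input is a string of `0` and `1` characters; `binary_to_hex` is a hypothetical helper, and in practice `format(int(bits, 2), "X")` gives the same result.

```python
HEX_DIGITS = "0123456789ABCDEF"

def binary_to_hex(bits: str) -> str:
    """Convert a binary string to hexadecimal by translating each 4-bit group."""
    # Pad with leading zeros so the length is a multiple of four.
    padded = bits.zfill((len(bits) + 3) // 4 * 4)
    hex_digits = []
    for i in range(0, len(padded), 4):
        nibble = padded[i:i + 4]  # four bits = one hex digit
        hex_digits.append(HEX_DIGITS[int(nibble, 2)])
    return "".join(hex_digits)

print(binary_to_hex("11111111"))   # one byte, two hex digits: FF
print(binary_to_hex("101011110"))  # padded to 0001 0101 1110: 15E
```

The grouping only works because sixteen is a power of two (2 to the fourth power); there is no equally tidy mapping between binary and decimal, which is why hexadecimal, not decimal, is the usual shorthand for raw bits.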